In an era where technology is integrated into almost every aspect of our lives, it is no surprise that many people are turning to AI therapy tools for emotional support. With the rise of sophisticated chatbots and generative models, the idea of a digital therapist available 24/7 has become increasingly appealing. However, as we navigate this new frontier, it is essential to understand the profound differences between AI therapy and working with a trained mental health professional.

At Bona Fide Psychotherapy, we believe it is important to explore why treating AI therapy tools as a substitute for professional psychotherapy is a slippery slope that can lead to serious psychological, ethical, and safety risks. While these tools may offer convenience and accessibility, they lack the core human elements required for safe, effective, and ethical mental health care.

The Illusion of Empathy in AI Therapy and the Loss of Human Connection

One of the most significant risks of AI therapy is the loss of genuine human connection. Therapy is not simply a conversation or an exchange of information. It is a deeply relational process built on empathy, attunement, trust, and the presence of a “human in the loop” (Canadian Mental Health Association [CMHA], 2025).

AI therapy tools are designed to mimic human conversation, which can create a powerful illusion of empathy. This false sense of emotional connection may encourage users to share their most vulnerable thoughts with a machine that cannot truly care, understand, or respond with emotional depth. The CMHA (2025) emphasizes that authentic human connection is a foundational pillar of mental well-being, something AI therapy cannot replicate.

Unlike a licensed therapist, an AI tool lacks non-verbal awareness, emotional intuition, and the ability to read tone, body language, or silence. Psychology Today (2024) highlights that a therapist’s clinical intuition and real-time adaptability are essential for effective therapy. Relying solely on AI therapy for emotional validation may increase feelings of isolation, as users are ultimately interacting with a reflection of their own language patterns rather than a caring human presence.

The Slippery Slope of Generative AI Therapy and Hallucinations

Another critical concern with AI therapy lies in how generative AI systems function. These models are designed to predict language patterns, not to deliver clinically accurate or evidence-based mental health care. This creates the risk of “hallucinations,” where AI therapy tools produce confident-sounding but incorrect or misleading information (Harvard Business School, 2024).

In a mental health context, hallucinations within AI therapy can be especially dangerous. A user experiencing emotional distress may receive advice that is inappropriate, harmful, or clinically unsound. Thriveworks (2025) warns that fluency does not equal therapeutic quality. Because AI therapy lacks moral reasoning, accountability, and real-world consequences, it cannot reliably self-correct when it offers harmful guidance (Harvard Business School, 2024).

Without clinical oversight, AI therapy becomes a high-risk substitute for professional care, particularly for individuals navigating complex emotional or psychological challenges.

Clinical Risks of AI Therapy: Misdiagnosis and Crisis Management

One of the most serious dangers of AI therapy is the risk of misdiagnosis and inadequate crisis response. Mental health care requires nuanced understanding, individualized assessment, and clinical judgment, none of which current AI tools can reliably provide (PMC, 2024).

AI therapy often relies on generalized responses rather than personalized treatment, which can be harmful when users present with complex mental health histories. Case Western Reserve University (2025) notes that AI therapy lacks the ability to understand trauma, cultural context, developmental factors, and long-term patterns that are critical for accurate assessment.

Experts also warn that AI therapy may unintentionally reinforce harmful behaviors. For example, an AI tool may promote weight loss without recognizing signs of an eating disorder (Thriveworks, 2025). More critically, AI therapy is not equipped to manage crisis situations. Unlike a human therapist, AI cannot assess risk accurately, initiate emergency protocols, or provide immediate human intervention. In some cases, guardrails may abruptly end conversations, leaving users without support during moments of acute vulnerability (Thriveworks, 2025; Case Western Reserve University, 2025).

Ethical Concerns and Professional Boundaries in AI Therapy

The ethical implications of AI therapy are another major area of concern. Licensed therapists operate within regulated systems that prioritize client safety, confidentiality, and accountability. AI therapy, by contrast, exists outside of these ethical and legal frameworks.

Psychiatry Online (2025) emphasizes that while AI may support administrative or educational tasks, it should never replace professional clinical judgment. When individuals rely on AI therapy as a primary mental health resource, they are effectively opting out of regulated healthcare. There is no governing body to hold AI therapy accountable for harm, misguidance, or breaches of trust.

Data privacy is another serious issue. Thriveworks (2025) reminds users that AI therapy tools are not bound by HIPAA standards, and it is often unclear how sensitive mental health data is collected, stored, or shared. Psychiatry Online (2025) further notes that storing deeply personal information on corporate servers raises ethical and stigma-related concerns. Overreliance on AI for therapy risks creating a future where human care becomes a privilege, while automated systems manage increasingly complex mental health needs (Mental Health Journal, 2025).

The Future of Mental Health: AI Therapy as a Tool, Not a Replacement

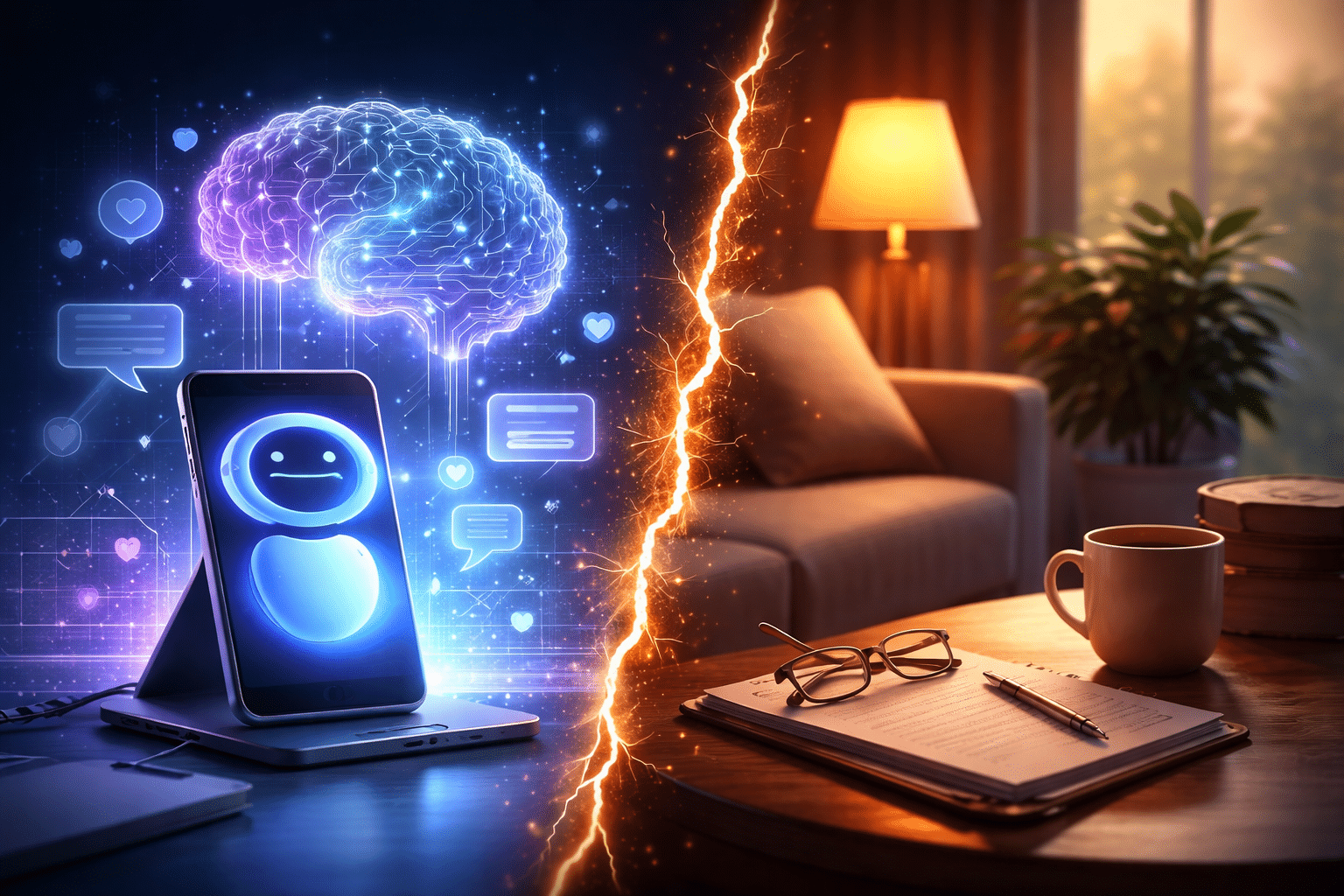

There is no doubt that technology will continue to shape the future of mental health care. When used appropriately, AI therapy tools can support mental wellness by helping users track moods, learn about therapy options, or organize thoughts between sessions (ScienceDirect, 2024; Thriveworks, 2025).

However, AI therapy must remain a supplement rather than a replacement. The deep, transformative work of therapy requires empathy, ethical responsibility, and human presence. The growing role of AI in therapy presents both opportunity and risk, offering accessibility while threatening to erode the human foundations of mental health care (Mental Health Journal, 2025).

If you are struggling, seeking professional help is an investment in your safety, privacy, and long-term resilience. AI therapy may offer information, but only a human therapist can provide accountability, ethical care, and genuine emotional connection.

Why AI Therapy Cannot Replace Human Therapists

The most important takeaway is this: AI therapy should be viewed as a supportive tool, not a clinical expert. While it can help organize thoughts or introduce coping concepts, it lacks the clinical training, ethical guardrails, and intuitive judgment of a licensed therapist.

AI therapy tools are designed to respond with agreeable language, whereas a therapist’s role is to challenge unhelpful patterns, support growth, and provide care grounded in evidence and ethics. Your mental health journey is deeply personal and far too complex for a one-size-fits-all algorithm.

Choosing a human therapist means choosing safety, accountability, and meaningful healing. Do not trade professional care for convenience. AI therapy may be clever, but it can never replace the depth, responsibility, and humanity of real psychotherapy.

To book a free 15-minute consultation with us, click here.


References: